Running CycleGAN programmatically

CycleGAN is a fantastic model, but its official implementation is difficult to modify. This post explains how to run one of its four models (i.e. DiscriminatorA, GeneratorA, DiscriminatorB or GeneratorB) in isolation and directly from Python.

Introduction

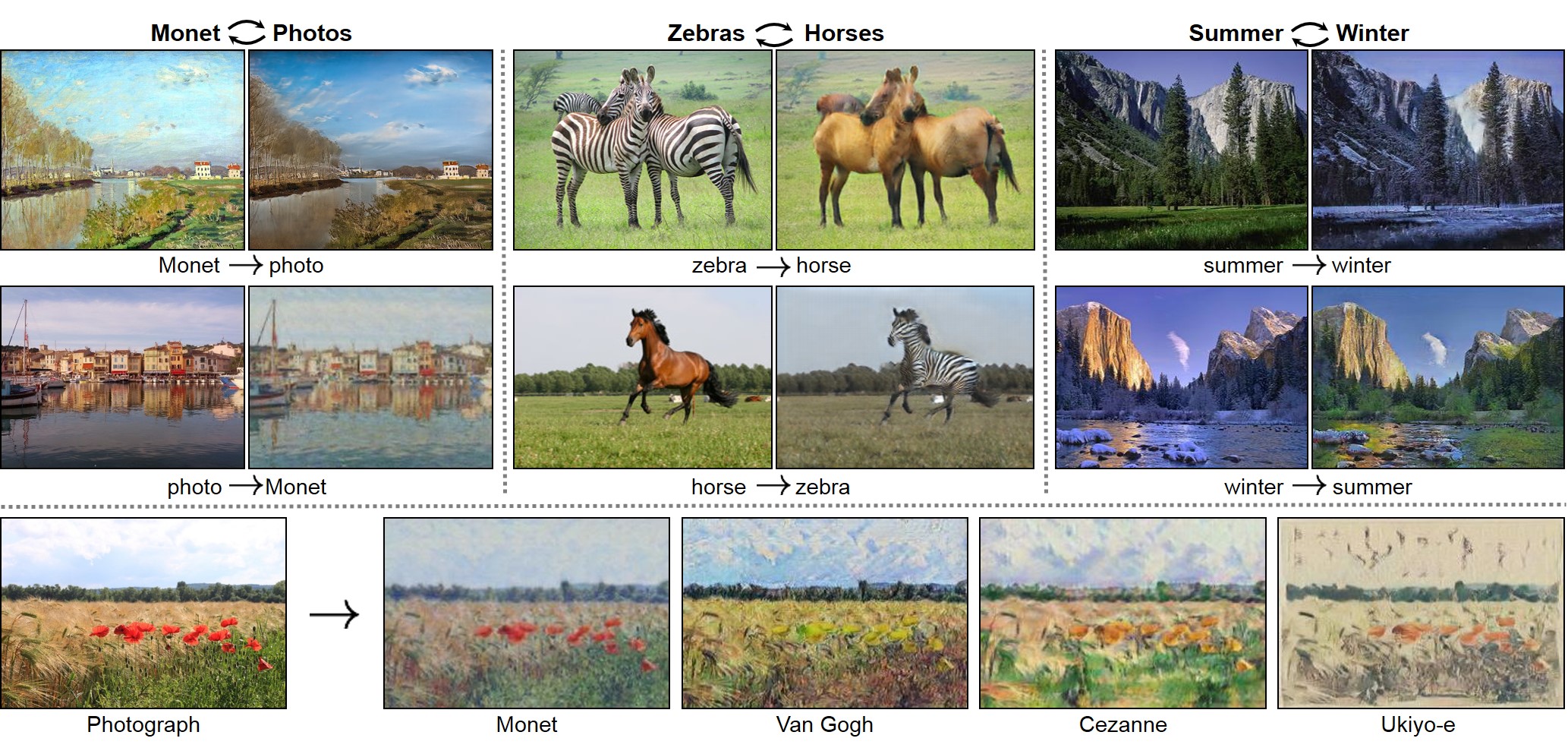

CycleGAN is a fantastic model allows us to do style transfer without pairing images pixel-to-pixel. Here are the examples from the project page:

I’m sure that if you found this post, you already know how it works. Basically, it uses a standard generative adversarial setup to learn a style transfer from one domain to another. However, it avoids mode collapse (every input mapping to the same output) by simultaneously learning how to undo the style transfer; this ensures that the fake image bears a resemblance to the original.
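For readers who want the formal version, the cycle-consistency idea from the CycleGAN paper is written below, with $G: X \to Y$ and $F: Y \to X$ as the two generators and $D_X$, $D_Y$ as the discriminators. This is the paper's notation; in this post's terms, $G$ and $F$ correspond roughly to GeneratorA and GeneratorB.

```latex
\mathcal{L}_{\text{cyc}}(G, F) =
    \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big]
  + \mathbb{E}_{y \sim p_{\text{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]

\mathcal{L}(G, F, D_X, D_Y) =
    \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y)
  + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X)
  + \lambda \, \mathcal{L}_{\text{cyc}}(G, F)
```

The $\lambda$-weighted term is what penalizes a generator that ignores its input: if $G(x)$ carries no information about $x$, then $F$ cannot reconstruct $x$ from it, and $\mathcal{L}_{\text{cyc}}$ stays large.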

Unfortunately, this project suffers from a number of technical debt issues, specifically “pipeline jungle” and “configuration debt” (see Table 1-1 from Thoughtful Machine Learning with Python). The only obvious way to use it is through the command-line interface. Even though it is written in PyTorch, it is very difficult to invoke from Python. (Besides calling it as a subprocess, of course, which is a terrible solution.)

In my case, I wanted to run one of the generators I had trained inside a Flask web app that predicts what someone might look like in drag. Since the web app is also written in Python, I wanted to interface with the model directly. So I started chopping up the source code.

This was harder than expected because:

- The model class cannot be instantiated without constructing a complicated `opt` object
- The preprocessing function cannot be generated without the same `opt` object
- The image has to be post-processed, which is non-obvious
- One has to use a custom Torch dataset to use a PIL image (which was my use case)
- CycleGAN is not pip-installable, making it difficult to list as a dependency
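To illustrate the first two problems, here is a minimal sketch of building an `opt`-like object by hand with `argparse.Namespace` instead of going through the command-line parser. The field names are my reading of the options the repository's test-time code path uses (for example `netG`, `norm`, `preprocess`, `load_size` and `crop_size`); treat them as assumptions and check them against `options/base_options.py` and `options/test_options.py` in your checkout, since the option set has changed over time. The helper name `make_test_opt` is hypothetical.

```python
from argparse import Namespace


def make_test_opt(name: str, checkpoints_dir: str = "./checkpoints", **overrides) -> Namespace:
    """Build a hypothetical stand-in for the repository's parsed `opt` object.

    All field names are assumptions based on the CycleGAN repository's
    option files; verify them against your checkout before relying on this.
    """
    opt = Namespace(
        # Which experiment / checkpoint folder to load weights from
        name=name,
        checkpoints_dir=checkpoints_dir,
        epoch="latest",
        load_iter=0,
        # Generator architecture: must match the settings used in training
        model="test",
        model_suffix="_A",  # assumption: load the A->B generator's weights
        netG="resnet_9blocks",
        ngf=64,
        norm="instance",
        no_dropout=True,
        init_type="normal",
        init_gain=0.02,
        input_nc=3,
        output_nc=3,
        # Options read when building the preprocessing transform
        preprocess="resize_and_crop",
        load_size=256,
        crop_size=256,
        no_flip=True,
        # Test-time settings, fixed here rather than parsed from the CLI
        isTrain=False,
        gpu_ids=[],  # empty list means run on the CPU
        batch_size=1,
        num_threads=0,
        serial_batches=True,
        verbose=False,
    )
    # Let callers override any field, e.g. netG="unet_256"
    for key, value in overrides.items():
        setattr(opt, key, value)
    return opt
```

A `Namespace` is a reasonable stand-in because the code that consumes `opt` mostly reads plain attributes from it. An object like this can then be handed to the repository's model factory (`create_model(opt)` followed by `model.setup(opt)` in the versions I am familiar with), but confirm those entry points in your copy.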

My solution to the packaging problem is to include the pytorch-CycleGAN-and-pix2pix repository in the project directory as a git submodule. This can be done like so:

```shell
git submodule add https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix
```

When you clone the project again later, don’t forget to initialize and synchronize the submodule:

```shell
git submodule init && git submodule update
```
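Because the repository is not a package, its top-level modules (such as `models`, `options`, `data` and `util`) are only importable once the submodule directory is on `sys.path`. One way to arrange that from your own code is sketched below. The helper name `add_submodule_to_path` is hypothetical, and the default directory name assumes the submodule was added with the `git submodule add` command above.

```python
import sys
from pathlib import Path


def add_submodule_to_path(project_root: Path,
                          submodule: str = "pytorch-CycleGAN-and-pix2pix") -> Path:
    """Prepend the CycleGAN submodule directory to sys.path (hypothetical helper).

    After this call, modules at the top level of the submodule
    (e.g. `models`, `options`) can be imported by name.
    """
    path = (project_root / submodule).resolve()
    if not path.is_dir():
        raise FileNotFoundError(
            f"submodule not found at {path}; did you run `git submodule update --init`?"
        )
    # Only insert once, so repeated calls do not grow sys.path
    if str(path) not in sys.path:
        sys.path.insert(0, str(path))
    return path
```

For example, a Flask app living next to the submodule could call `add_submodule_to_path(Path(__file__).resolve().parent)` before importing from `models`. One caveat with this approach: names like `util` and `data` are generic, so putting the submodule first on `sys.path` can shadow modules with the same names in your own project.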

Then, here is the code, which is available as a gist.
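The post-processing step is the least obvious part. As far as I can tell from the repository's `get_transform` (in `data/base_dataset.py`) and `tensor2im` (in `util/util.py`), input pixels are scaled to [0, 1] and then normalized with mean 0.5 and standard deviation 0.5, which maps them to [-1, 1]. The generator's output ends in a tanh, so it lives in the same range and is channels-first. Converting back therefore means undoing that normalization and moving the channel axis last. The sketch below shows only that arithmetic, in plain Python on nested lists, so it runs without PyTorch; the function names are hypothetical, and real tensors also carry a leading batch dimension, which `tensor2im` drops by taking element 0.

```python
from typing import List

# Nested-list stand-ins for arrays (hypothetical aliases for this sketch):
# HWC = height x width x channels (PIL-style),
# CHW = channels x height x width (PyTorch-style)
HWC = List[List[List[int]]]
CHW = List[List[List[float]]]


def preprocess(pixels: HWC) -> CHW:
    """Map uint8 HWC pixels to CHW floats in [-1, 1].

    Mirrors ToTensor() (divide by 255, move channels first) followed by
    Normalize(mean=0.5, std=0.5), i.e. x -> (x / 255 - 0.5) / 0.5.
    """
    height, width, channels = len(pixels), len(pixels[0]), len(pixels[0][0])
    return [[[(pixels[y][x][c] / 255.0 - 0.5) / 0.5 for x in range(width)]
             for y in range(height)]
            for c in range(channels)]


def postprocess(tensor: CHW) -> HWC:
    """Map CHW floats in [-1, 1] (e.g. a tanh output) back to uint8 HWC pixels.

    Undoes the normalization with (v + 1) / 2 * 255, then rounds and clamps
    so slightly out-of-range values still yield valid pixels.
    """
    channels, height, width = len(tensor), len(tensor[0]), len(tensor[0][0])

    def to_byte(v: float) -> int:
        return max(0, min(255, round((v + 1.0) / 2.0 * 255.0)))

    return [[[to_byte(tensor[c][y][x]) for c in range(channels)]
             for x in range(width)]
            for y in range(height)]
```

The rounding and clamping here are slightly more defensive than a plain cast to `uint8`. With real images, you would build the input tensor with the repository's transform (or `torchvision.transforms`), add a batch dimension with `unsqueeze(0)`, run the generator under `torch.no_grad()`, and apply the same inverse mapping to the first element of the output batch before handing it to PIL.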

Miscellaneous Thoughts

As an aside, if I had to do this from scratch, I would probably start with cy-xu’s implementation, which has less code.

2020-01-08 19:00